Optimization for Machine Learning

Image source: Mateo Gallo

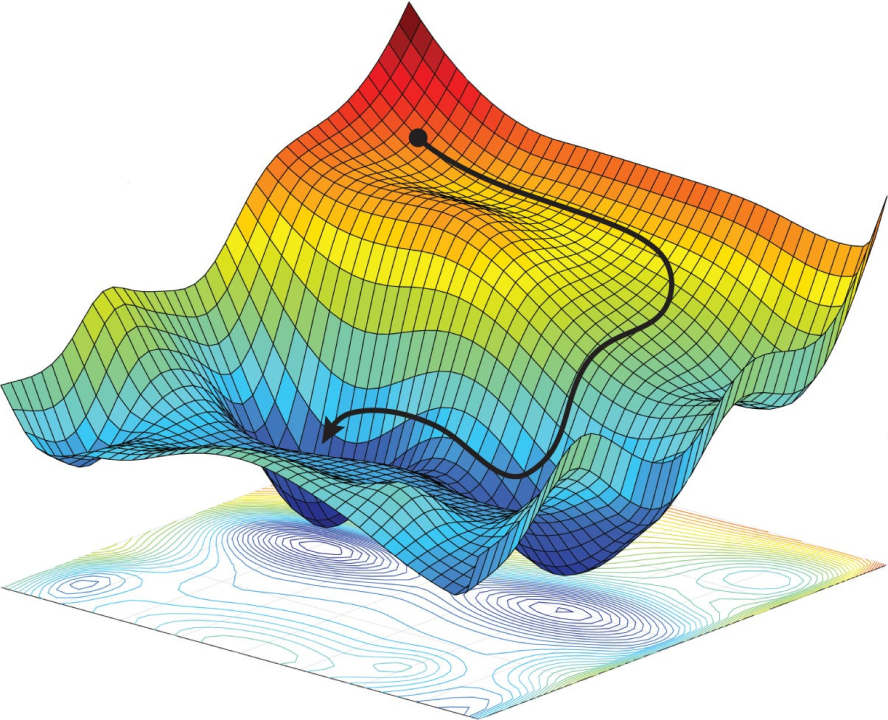

Many problems in statistical learning consist of finding the best set of parameters or the best functions given some data. These are estimation problems.

These problems, encountered almost everywhere in Machine Learning and Deep Learning, can easily be formulated as optimization problems. These formulations help to understand the performance of learning algorithms.
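As an illustration only, one common way to write such an estimation problem is empirical risk minimization. The symbols below (parameters $\theta$, model $h_\theta$, loss $\ell$, data points $(x_i, y_i)$) are assumptions introduced for this sketch and are not taken from the course material:

$$
\min_{\theta \in \mathbb{R}^d} \; F(\theta) = \frac{1}{n} \sum_{i=1}^{n} \ell\big(h_\theta(x_i),\, y_i\big)
$$

For example, least-squares regression corresponds to $h_\theta(x) = \theta^\top x$ and $\ell(\hat{y}, y) = \tfrac{1}{2}(\hat{y} - y)^2$, which gives a convex objective $F$.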

In this course, classical convex optimization theory was presented. Classical gradient methods and their variants were discussed, as well as variance-reduced methods and accelerated gradient methods.

The purpose of the course was to present the theoretical formulation of convex problems and the asymptotic behavior of (stochastic) gradient methods. For instance, it was shown that although Stochastic Gradient Descent (SGD) is efficient, because each update uses only part of the dataset rather than all of it, it does not converge to the optimal solution without restrictive assumptions. We also implemented these algorithms from scratch and worked on theoretical exercises.
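The contrast between full-batch gradient descent and SGD can be sketched on a toy problem. The code below is a minimal illustration, not the course's implementation: the 1-D least-squares objective, the synthetic data, the step sizes, and the function names (`make_data`, `gradient_descent`, `sgd`) are all assumptions chosen for this example.

```python
import random


def make_data(n, rng):
    """Synthetic 1-D regression data (assumed for illustration): y = 3*x + noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + rng.gauss(0.0, 0.5) for x in xs]
    return xs, ys


def gradient_descent(xs, ys, lr, steps):
    """Full-batch gradient descent on f(w) = 1/(2n) * sum_i (w*x_i - y_i)^2."""
    n = len(xs)
    w = 0.0
    for _ in range(steps):
        # The exact gradient uses every data point at each step.
        grad = sum((w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


def sgd(xs, ys, lr, steps, rng):
    """SGD with a constant step size: one randomly drawn sample per update."""
    w = 0.0
    for _ in range(steps):
        i = rng.randrange(len(xs))
        # Unbiased but noisy estimate of the full gradient.
        w -= lr * (w * xs[i] - ys[i]) * xs[i]
    return w


rng = random.Random(0)
xs, ys = make_data(200, rng)

# Closed-form minimizer of the 1-D least-squares objective, used as a reference.
w_star = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

w_gd = gradient_descent(xs, ys, lr=0.5, steps=200)
w_sgd = sgd(xs, ys, lr=0.1, steps=2000, rng=rng)

print("GD  error:", abs(w_gd - w_star))
print("SGD error:", abs(w_sgd - w_star))
```

In this setting, each SGD update costs one sample instead of all 200, but with a constant step size the iterate typically keeps fluctuating in a neighborhood of the minimizer rather than settling on it. A decreasing step size or a variance-reduced method is the usual way to shrink that neighborhood.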

Please refer to the course material.

The course was given by Dr. Lionel Tondji, a former student of AMMI. Find the goodbye photos here.

Assignments

Keywords

Convex and Non-Convex Optimization, Gradient Descent, Stochastic Gradient Descent, Proximal Gradient Descent, Accelerated Gradient Descent, Variance-Reduced Methods

Selected photos of the last day of the lecture.